AI is Transforming Customer Satisfaction – A Deep Dive with Spring AI and OpenAI

When Customer Service Fails: A Personal & Industry-Wide Problem

I recently had a frustrating experience with one of the largest charger providers in the U.S. I shared the full story and my takeaways in a separate post. Despite being a market leader, their customer service was slow, dismissive, and unhelpful. What should have been a simple resolution dragged on for weeks.

This isn’t just a one-time bad experience—it’s a widespread issue in customer service. While working at AWS, I frequently reviewed unresolved support tickets and noticed the same pattern at scale. Many tickets remained open not because they weren’t important, but because there was no effective way to measure whether conversations were actually moving toward resolution. Worse, frustrated customers often leave silently, without giving feedback—resulting in lost business before companies even realize there was a problem.

The real problem? There was no automated way to evaluate conversation health—whether the customer’s issue was moving toward resolution or if they were stuck in an endless loop of responses. Without AI-driven analysis, companies struggle to detect when a conversation is losing clarity, sentiment is declining, or the issue remains unresolved despite multiple exchanges.

Most customer service teams rely on manual tracking of ticket progress, often missing key indicators of customer frustration and unresolved issues. As a result, support teams may believe they are addressing problems effectively, while customers feel unheard and dissatisfied.

This is exactly where AI can make a difference—by analyzing conversation sentiment, clarity, and resolution progress, AI can track whether customer concerns are truly being addressed and highlight interactions that require escalation before frustration builds up. Instead of just classifying tickets, the focus shifts to ensuring that every conversation moves efficiently toward resolution and customer satisfaction.

How AI Can Solve This Problem with Spring AI and OpenAI

To address these challenges, I’ll walk through how to use Spring AI with OpenAI to build an AI-driven solution. This approach can be applied across various domains where timely and accurate responses are crucial, such as customer support, helpdesk automation, incident management, and beyond.

In the following sections, I’ll cover:

✅ How to set up Spring AI with OpenAI

✅ How to configure AI-powered responses

✅ How AI can evaluate conversation health and detect customer frustration

This hands-on guide will demonstrate how AI can transform customer service operations and improve user satisfaction. Let’s dive into the implementation. 🚀

How to Set Up Spring AI with OpenAI

To bring AI-powered automation into FlowInquiry, we will use Spring AI, a Spring project that integrates AI models such as OpenAI’s GPT into Spring Boot applications. This setup lets us process customer inquiries, analyze sentiment, and generate intelligent responses.

1. Adding Spring AI Dependencies

First, we need to include the Spring AI dependencies in our build.gradle file:

```groovy
dependencies {
    api(libs.bundles.spring.ai)
}
```

We keep all Spring AI dependencies in the Gradle version catalog file (by default, gradle/libs.versions.toml). As you can see, we use Ollama as well as OpenAI, but this article focuses on OpenAI.

```toml
[versions]
springAiVersion = "1.0.0-M5"

[libraries]
spring-ai-openai = { module = "org.springframework.ai:spring-ai-openai-spring-boot-starter", version.ref = "springAiVersion" }
spring-ai-ollama = { module = "org.springframework.ai:spring-ai-ollama-spring-boot-starter", version.ref = "springAiVersion" }

[bundles]
spring-ai = ["spring-ai-openai", "spring-ai-ollama"]
```

2. Configuring OpenAI API in Spring Boot

Next, we configure Spring AI in application.yaml (or application.properties):

```yaml
spring:
  ai:
    openai:
      api-key: ${OPEN_AI_API_KEY}
      chat:
        options:
          model: ${OPEN_AI_CHAT_MODEL}
```

Make sure you store the API key securely. In FlowInquiry, we use dotenv-java to load these environment variables from a local .env file:

```properties
# API key for authenticating with OpenAI services.
# Critical for accessing OpenAI's APIs. Store it securely.
OPEN_AI_API_KEY=xxx

# Specifies the OpenAI model to use for processing requests.
# Example: gpt-3.5-turbo, gpt-4-turbo, etc.
OPEN_AI_CHAT_MODEL=gpt-4-turbo
```

3. Developing a Chat Service Wrapper for the AI Chat Model

Spring AI provides an abstraction for chat models through the org.springframework.ai.chat.model.ChatModel interface.

While models like OpenAiChatModel and OllamaChatModel can be used directly, FlowInquiry aims to offer a flexible solution in which customers can choose their preferred chat model. We therefore hide the concrete model behind a small ChatModelService interface:

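```java
import org.springframework.ai.chat.prompt.Prompt;

public interface ChatModelService {

    // Sends a plain-text prompt and returns the model's reply
    String call(String input);

    // Sends a structured Prompt (messages plus options) and returns the reply text
    String call(Prompt prompt);
}
```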

The OpenAI implementation, OpenAiChatModelService, is enabled only when both environment variables, OPEN_AI_API_KEY and OPEN_AI_CHAT_MODEL, are set:

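```java
import org.springframework.ai.chat.model.ChatResponse;
import org.springframework.ai.chat.model.Generation;
import org.springframework.ai.chat.prompt.Prompt;
import org.springframework.ai.openai.OpenAiChatModel;
import org.springframework.boot.autoconfigure.condition.ConditionalOnProperty;
import org.springframework.stereotype.Service;

@Service
@ConditionalOnProperty(
    name = {"OPEN_AI_CHAT_MODEL", "OPEN_AI_API_KEY"},
    matchIfMissing = false)
public class OpenAiChatModelService implements ChatModelService {

    private final OpenAiChatModel openAiChatModel;

    public OpenAiChatModelService(OpenAiChatModel openAiChatModel) {
        this.openAiChatModel = openAiChatModel;
    }

    @Override
    public String call(String input) {
        return openAiChatModel.call(input);
    }

    @Override
    public String call(Prompt prompt) {
        ChatResponse response = openAiChatModel.call(prompt);
        Generation generation = response.getResult();
        // Return an empty string when the model produced no generation
        return (generation != null) ? generation.getOutput().getText() : "";
    }
}
```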

The OllamaChatModelService follows the same pattern:

```java
@Service
@ConditionalOnProperty(
    name = {"OLLAMA_CHAT_MODEL", "OLLAMA_API_KEY"},
    matchIfMissing = false)
public class OllamaChatModelService implements ChatModelService {
    // ...
}
```

We configure the system to use only one chat model at a time. In FlowInquiry, Ollama is preferred over OpenAI when both are available.

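```java
import java.util.Optional;
import org.springframework.boot.autoconfigure.condition.ConditionalOnBean;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.context.annotation.Primary;

@Configuration
public class ChatModelConfiguration {

    // Registered only when BOTH services exist: @ConditionalOnBean requires every listed
    // bean to be present. When just one service is configured, it is the only
    // ChatModelService candidate, so no @Primary bean is needed.
    @Bean
    @Primary
    @ConditionalOnBean({OllamaChatModelService.class, OpenAiChatModelService.class})
    public ChatModelService chatModel(
            Optional<OllamaChatModelService> ollamaChatModelService,
            Optional<OpenAiChatModelService> openAiChatModelService) {
        // Ollama takes precedence over OpenAI when both are available
        if (ollamaChatModelService.isPresent()) {
            return ollamaChatModelService.get();
        } else if (openAiChatModelService.isPresent()) {
            return openAiChatModelService.get();
        }
        // Not reached in practice, because this bean is only created when both services exist
        return null;
    }
}
```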

With ChatModelService now ready, this article demonstrates how to use OpenAI for evaluating customer satisfaction by setting OPEN_AI_API_KEY and OPEN_AI_CHAT_MODEL. In the next article, we will explore how Ollama performs in comparison.

How AI can evaluate conversation health and detect customer frustration

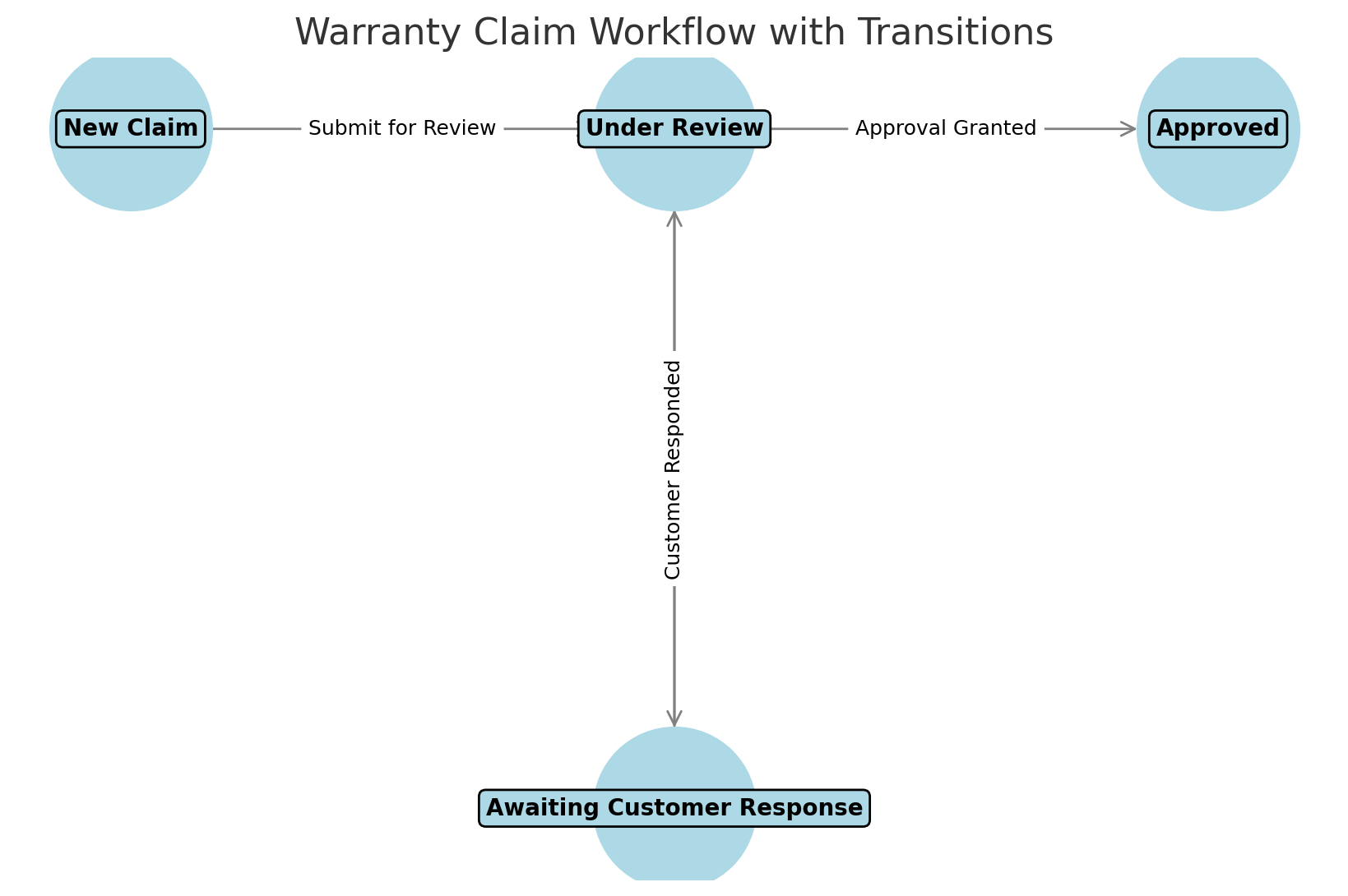

In FlowInquiry, users can create issues by providing a title and description. Once created, both customers and team members can engage in discussions through conversations. Additionally, the ticket progresses through various states based on the workflow associated with the ticket.

For example, consider a warranty claim process:

- A customer submits a ticket for a warranty claim.

- Team members review the claim and request additional information if needed.

- The conversation between the customer and the team unfolds through comments.

- The ticket transitions through different workflow states, such as:

  - New Claim → Initial stage when the customer submits the claim.
  - Under Review → The support team examines the claim details.
  - Awaiting Customer Response → The team requests additional information.
  - Approved → The claim is approved and resolved.

For simplicity, this example does not cover every state in the warranty claim process, such as Processing Replacement/Repair or Claim Rejected. The focus is on illustrating how AI can monitor conversation health within a structured workflow.
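To make the simplified workflow concrete, here is a minimal sketch of it as a Java enum. The name WarrantyClaimState and the allowed transitions (including the loop from Awaiting Customer Response back to Under Review) are assumptions for illustration, not FlowInquiry's actual workflow definition.

```java
import java.util.EnumSet;
import java.util.Set;

// Hypothetical model of the simplified warranty claim workflow.
// The transitions below are assumed for illustration only.
enum WarrantyClaimState {
    NEW_CLAIM,
    UNDER_REVIEW,
    AWAITING_CUSTOMER_RESPONSE,
    APPROVED;

    /** States this state may move to (assumed transitions). */
    Set<WarrantyClaimState> next() {
        switch (this) {
            case NEW_CLAIM:
                return EnumSet.of(UNDER_REVIEW);
            case UNDER_REVIEW:
                return EnumSet.of(AWAITING_CUSTOMER_RESPONSE, APPROVED);
            case AWAITING_CUSTOMER_RESPONSE:
                // The customer replies and the claim goes back to review
                return EnumSet.of(UNDER_REVIEW);
            default:
                // APPROVED is terminal in this simplified flow
                return EnumSet.noneOf(WarrantyClaimState.class);
        }
    }

    boolean canMoveTo(WarrantyClaimState target) {
        return next().contains(target);
    }
}
```

Each comment exchanged while a ticket sits in one of these states is what the conversation health score evaluates.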

Using AI to Evaluate Conversation Health

To ensure a smooth customer experience, AI continuously analyzes conversations by tracking sentiment, clarity, and resolution success.

Sentiment is measured by evaluating the tone of messages, assigning a score (S) between 0 and 1, where 0 represents negative sentiment and 1 represents positive sentiment. Clarity (C) is assessed by comparing how many customer questions have been resolved with the total number of questions asked. Resolution success (R) is determined by checking whether a message resolves an issue in the conversation.

The final conversation health score (H) is computed using a weighted formula:

H = 0.4 * S + 0.4 * C + 0.2 * R

where:

- S is the cumulative sentiment score

- C is the clarity score

- R is the resolution success indicator (1 if the latest message resolves the issue, otherwise 0)

This ensures that sentiment and clarity contribute the most (40% each) while issue resolution has a moderate impact (20%). AI continuously updates this score, giving teams a real-time view of customer interactions and helping them act before issues escalate.
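As a quick sanity check of the weights, the formula can be written as a small helper. ConversationHealth.score is a hypothetical name, and this sketch leaves out the priority weighting discussed later.

```java
// Minimal sketch of H = 0.4 * S + 0.4 * C + 0.2 * R, before any priority weighting.
final class ConversationHealth {
    private ConversationHealth() {}

    /**
     * @param sentiment cumulative sentiment score S
     * @param clarity   clarity score C in [0, 1]
     * @param resolved  resolution indicator R (true maps to 1, false to 0)
     */
    static float score(float sentiment, float clarity, boolean resolved) {
        float r = resolved ? 1.0f : 0.0f;
        return 0.4f * sentiment + 0.4f * clarity + 0.2f * r;
    }
}
```

For example, S = 0.5, C = 1.0, and R = 0 give H = 0.2 + 0.4 + 0 = 0.6; if the same message also resolves the issue, H rises to 0.8.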

1. Calculate the sentiment

We use OpenAI to analyze the sentiment of a new ticket request or comment, assigning a score between 0 and 1. To keep the results consistent rather than fluctuating randomly, we explicitly set the temperature to 0.1.

The temperature parameter controls the level of randomness in AI-generated responses. A higher temperature (e.g., 0.8 - 1.0) produces more varied and creative answers, while a lower temperature (e.g., 0.0 - 0.2) produces more consistent and predictable outputs. Since sentiment analysis requires accuracy and repeatability, we set temperature = 0.1 to minimize randomness so that the AI returns a stable sentiment score for each evaluation.
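To see why a low temperature stabilizes the output, the sketch below applies the standard temperature-scaled softmax, p_i = exp(z_i / T) / Σ_j exp(z_j / T), to a toy set of logits. TemperatureDemo is a hypothetical class, and this is the textbook formulation rather than OpenAI's internal sampling code.

```java
// Toy illustration of temperature scaling over next-token logits.
final class TemperatureDemo {
    private TemperatureDemo() {}

    static double[] softmax(double[] logits, double temperature) {
        if (temperature <= 0) {
            throw new IllegalArgumentException("temperature must be > 0 in this sketch");
        }
        // Subtract the largest scaled logit for numerical stability
        double max = Double.NEGATIVE_INFINITY;
        for (double z : logits) {
            max = Math.max(max, z / temperature);
        }
        double[] p = new double[logits.length];
        double sum = 0.0;
        for (int i = 0; i < logits.length; i++) {
            p[i] = Math.exp(logits[i] / temperature - max);
            sum += p[i];
        }
        // Normalize so the probabilities add up to 1
        for (int i = 0; i < p.length; i++) {
            p[i] /= sum;
        }
        return p;
    }
}
```

With logits {2, 1, 0}, the most likely token gets about 67% of the probability at T = 1.0 but more than 99.9% at T = 0.1, so repeated calls almost always return the same score. A low temperature makes results more repeatable, not guaranteed identical.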

The implementation is as follows:

```java
private float evaluateSentiment(String newMessage) {
    // Ask the model for a single sentiment score between 0 and 1
    String response =
        chatModelService.call(
            new Prompt(
                "Evaluate the sentiment of this message and return a score between 0 and 1. "
                    + "Provide only the number.\n\nMessage: "
                    + newMessage,
                OpenAiChatOptions.builder()
                    .temperature(0.1)        // low randomness for repeatable scores
                    .maxCompletionTokens(10) // the reply should be a single number
                    .build()));

    // Extract the sentiment score, assuming the response contains a parsable float
    try {
        float score = Float.parseFloat(response.trim());
        // Clamp in case the model strays outside the requested range
        return Math.max(0f, Math.min(1f, score));
    } catch (NumberFormatException e) {
        throw new IllegalStateException(
            "Unable to parse sentiment score from OpenAI response: " + response);
    }
}
```
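Float.parseFloat rejects replies such as "Score: 0.85" or "0.85.", which models occasionally return despite the instruction. If that becomes an issue, a more tolerant parser could extract the first number and clamp it into [0, 1]. ScoreParser is a hypothetical helper, a sketch under that assumption rather than FlowInquiry's code.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical tolerant parser for a numeric score embedded in a model reply.
final class ScoreParser {
    // First (optionally negative) decimal number, e.g. "0.85", "1", ".7", "-0.3"
    private static final Pattern NUMBER = Pattern.compile("-?\\d*\\.?\\d+");

    private ScoreParser() {}

    /** Extracts the first number from the reply and clamps it into [0, 1]. */
    static float parseScore(String reply) {
        if (reply == null) {
            throw new IllegalStateException("Empty sentiment response");
        }
        Matcher m = NUMBER.matcher(reply);
        if (!m.find()) {
            throw new IllegalStateException("Unable to parse sentiment score from: " + reply);
        }
        float score = Float.parseFloat(m.group());
        return Math.max(0f, Math.min(1f, score));
    }
}
```

Taking the first number is a heuristic: a reply like "on a 0-1 scale: 0.8" would yield 0, so keeping the prompt strict remains the first line of defense.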

To make sentiment analysis reflect the customer’s experience, we give customer responses a higher weight than agent messages. This limits how much agents can inflate the sentiment score simply by adding positive comments.

The cumulative sentiment score is updated using the following formula:

Cumulative Sentiment (CS) = [(Previous CS × (T - 1)) + (S × W_s)] / T

where:

- CS is the cumulative sentiment score.

- S is the sentiment score of the latest message.

- T is the total number of messages in the conversation, including the latest one.

- W_s is the sentiment weight, set to 1.5x for customer responses and 1.0x for agent responses.

The formula above is reflected in the code:

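```java
// W_s: customer messages count 1.5x, agent messages 1.0x
float sentimentWeight = isCustomerResponse ? 1.5f : 1.0f;
// CS = [(previous CS × (T - 1)) + (S × W_s)] / T,
// assuming getTotalMessages() already includes the latest message
health.setCumulativeSentiment(
    (health.getCumulativeSentiment() * (health.getTotalMessages() - 1)
        + sentimentScore * sentimentWeight)
    / health.getTotalMessages());
```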
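The update is a running average, so it is easy to check with a few messages. CumulativeSentiment is a hypothetical class that keeps CS and T in memory; in the snippet above, these values live on the health object instead.

```java
// Hypothetical in-memory version of the cumulative sentiment update.
final class CumulativeSentiment {
    private float cumulative = 0f; // CS
    private int totalMessages = 0; // T

    /** Applies CS = [(CS_prev × (T - 1)) + (S × W_s)] / T for one new message. */
    float add(float sentimentScore, boolean isCustomerResponse) {
        totalMessages++; // T now includes the new message
        float weight = isCustomerResponse ? 1.5f : 1.0f; // W_s
        cumulative = (cumulative * (totalMessages - 1) + sentimentScore * weight) / totalMessages;
        return cumulative;
    }
}
```

For example, a customer message with S = 0.2 gives CS = 0.3, an agent reply with S = 0.9 brings it to (0.3 × 1 + 0.9) / 2 = 0.6, and a customer message with S = 0.4 keeps it at (0.6 × 2 + 0.4 × 1.5) / 3 = 0.6. Because W_s multiplies the score itself, CS can exceed 1 (a first customer message with S = 1 yields 1.5), and a very negative customer message (S near 0) gains little from the extra weight. The Math.min(1.0f, ...) cap on the final health score bounds the result; if you want the weight to control how much each message counts instead, a weighted average Σ(W_s × S) / Σ W_s is one alternative that stays within [0, 1].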

2. Evaluate if customers raise a new question

We prompt the AI model to determine whether the customer’s message raises a new question:

```java
private boolean isQuestion(String message) {
    String aiPrompt =
        "Determine if the following message is a question. Respond with 'true' or 'false':\n\nMessage: "
            + message;
    String aiResponse = chatModelService.call(aiPrompt);
    // parseBoolean returns true only for "true" (case-insensitive); anything else is false
    return Boolean.parseBoolean(aiResponse.trim());
}
```
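Boolean.parseBoolean returns true only when the whole string equals "true", ignoring case, so a reply such as "True." or "'true'" is read as false. A slightly more forgiving check, sketched below as a hypothetical YesNoParser helper, could serve both isQuestion and determineIfResolved.

```java
import java.util.Locale;

// Hypothetical, more forgiving interpretation of a 'true'/'false' model reply.
final class YesNoParser {
    private YesNoParser() {}

    /** Returns true when the reply starts with "true" (case-insensitive), after trimming. */
    static boolean parseTrueFalse(String reply) {
        if (reply == null) {
            return false;
        }
        String normalized = reply.trim().toLowerCase(Locale.ROOT);
        // Drop leading quotes or backticks the model sometimes wraps around the answer
        normalized = normalized.replaceAll("^[\"'`]+", "");
        return normalized.startsWith("true");
    }
}
```

Replies like "The answer is true" still read as false, so the prompt should keep asking for a bare 'true' or 'false'.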

3. Evaluate whether a question is resolved and calculate the clarity score

The clarity score is determined by the ratio of resolved questions to total questions in a conversation. It provides insight into how effectively customer inquiries are being addressed.

C = Q_r / Q_t

where:

- Q_r represents the number of resolved questions

- Q_t is the total number of questions asked by the customer.

Each time an agent responds, AI evaluates whether any previously raised questions have been resolved. This ensures that the clarity score dynamically reflects how well the conversation is progressing, helping identify interactions where customer concerns remain unanswered.

```java
private boolean determineIfResolved(String message) {
    String aiPrompt =
        "Does the following message indicate that the issue has been resolved? Respond with 'true' or 'false':\n\nMessage: "
            + message;
    String aiResponse = chatModelService.call(aiPrompt);
    return Boolean.parseBoolean(aiResponse.trim());
}
```
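The bookkeeping behind C can be sketched as two counters. ClarityTracker and its method names are hypothetical, and returning 1.0 when no question has been asked yet is an assumption (the formula is undefined when Q_t = 0).

```java
// Hypothetical counters behind the clarity score C = Q_r / Q_t.
final class ClarityTracker {
    private int totalQuestions;    // Q_t
    private int resolvedQuestions; // Q_r

    /** Call when the AI flags a customer message as a new question. */
    void onQuestionAsked() {
        totalQuestions++;
    }

    /** Call when the AI decides an agent reply resolved an open question. */
    void onQuestionResolved() {
        // Never count more resolutions than questions asked, so C stays within [0, 1]
        if (resolvedQuestions < totalQuestions) {
            resolvedQuestions++;
        }
    }

    /** Returns Q_r / Q_t, or 1.0 when no question has been asked yet (assumed default). */
    float clarity() {
        return totalQuestions == 0 ? 1.0f : (float) resolvedQuestions / totalQuestions;
    }
}
```

With two open questions and one resolved, C = 1 / 2 = 0.5.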

4. Weighting Conversation Health by Ticket Priority

Not all tickets are treated equally—critical tickets require immediate attention. If a high-priority ticket has poor conversation health, its impact should be amplified to ensure it gets noticed by the right people. This ensures that unresolved critical issues don’t go unnoticed, ultimately improving team performance.

To achieve this, we apply a priority-based weight to the conversation health score. The weights can be adjusted to fit specific requirements, so urgent tickets get the focus they need while each priority level keeps its own setting. 🚀

```java
private float getPriorityWeight(TeamRequestPriority priority) {
    switch (priority) {
        case Critical: return 1.5f;
        case High:     return 1.2f;
        case Medium:   return 1.0f;
        case Low:      return 0.8f;
        case Trivial:  return 0.6f;
        default:       return 1.0f; // Default to neutral weight
    }
}
```

Finally, we multiply the conversation health score by the priority weight and cap the result at 1. The cap prevents the weight from having an excessive impact and keeps the adjusted score within the same 0 to 1 range used for classification.

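```java
// priorityWeight: value from getPriorityWeight(...) for the ticket's priority
// resolvesIssue: whether the latest message resolves the issue (R)
// The three terms mirror H = 0.4 * S + 0.4 * C + 0.2 * R; the result is capped at 1
health.setConversationHealth(
    Math.min(1.0f, priorityWeight * (
        (0.4f * health.getCumulativeSentiment())
            + (0.4f * weightedClarityScore)
            + (0.2f * (resolvesIssue ? 1.0f : 0.0f)))));
```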
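Since a higher score means a healthier conversation, multiplying by a weight above 1 makes a Critical ticket look healthier: H = 0.5 becomes 0.75 (Good). If the goal is to make poor health on critical tickets stand out, one option is to weight the shortfall 1 - H instead. The sketch below compares the two; PriorityAdjustment and scaleDeficit are hypothetical names, and the deficit variant is an alternative, not FlowInquiry's code.

```java
// Hypothetical comparison of two ways to apply a priority weight w to a health score H in [0, 1].
final class PriorityAdjustment {
    private PriorityAdjustment() {}

    /** The approach shown above: min(1, w * H). A weight above 1 raises the score. */
    static float scaleScore(float health, float weight) {
        return Math.min(1.0f, weight * health);
    }

    /**
     * Alternative (an assumption): scale the shortfall 1 - H, so a weight above 1
     * pushes a weak score further down. Expects health in [0, 1].
     */
    static float scaleDeficit(float health, float weight) {
        float deficit = Math.min(1.0f, weight * (1.0f - health));
        return 1.0f - deficit;
    }
}
```

With w = 1.5 (Critical) and H = 0.5, scaleScore returns 0.75 while scaleDeficit returns 0.25 (Poor), which surfaces the ticket. For a Trivial ticket (w = 0.6), scaleDeficit softens the same score to 0.7.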

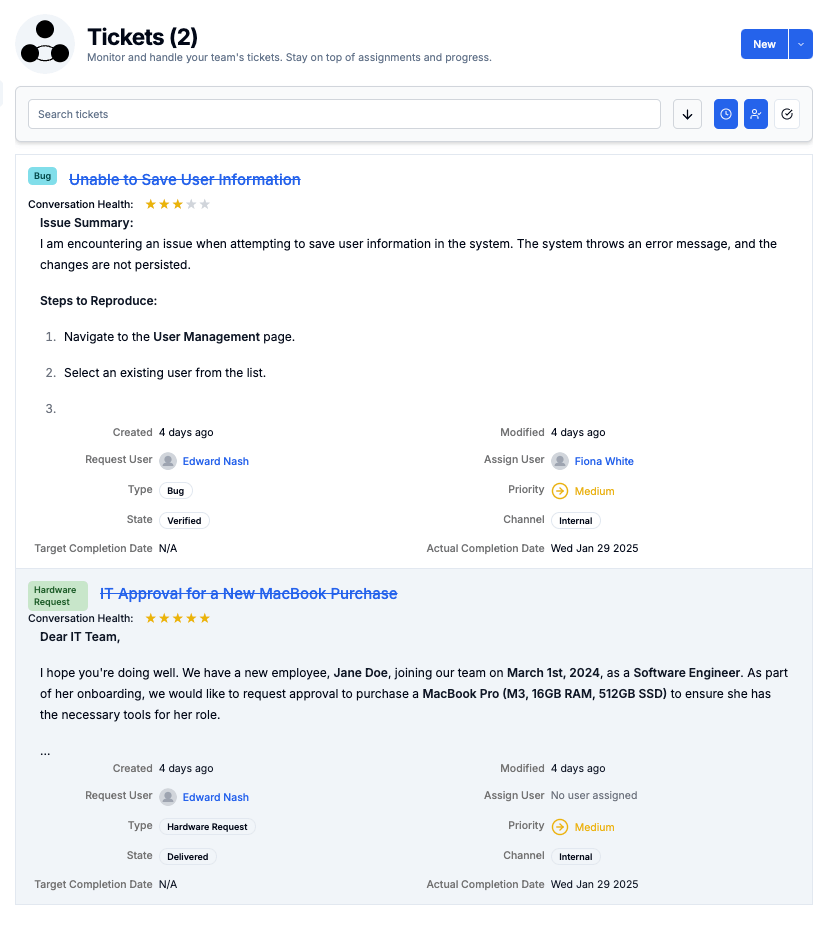

5. Classify tickets by conversation health

The conversation health score ranges from 0 to 1 and maps to the following health levels:

| Score Range (H) | Health Level |
|---|---|
| H ≥ 0.8 | Excellent |
| 0.6 < H < 0.8 | Good |
| 0.4 < H ≤ 0.6 | Fair |
| 0.2 < H ≤ 0.4 | Poor |
| H ≤ 0.2 | Critical |

The classification logic is implemented as follows:

```java
public TicketHealthLevel getHealthLevel() {
    // Float literals keep the boundaries aligned with the table for a float score
    // (e.g. exactly 0.6f lands in FAIR, not GOOD)
    if (conversationHealth >= 0.8f) {
        return TicketHealthLevel.EXCELLENT;
    } else if (conversationHealth > 0.6f) {
        return TicketHealthLevel.GOOD;
    } else if (conversationHealth > 0.4f) {
        return TicketHealthLevel.FAIR;
    } else if (conversationHealth > 0.2f) {
        return TicketHealthLevel.POOR;
    } else {
        return TicketHealthLevel.CRITICAL;
    }
}
```
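For reference, a self-contained version of the classification follows, assuming the score is stored as a float. It uses float literals on purpose: in Java, 0.6f > 0.6 is true because 0.6f widens to 0.6000000238..., so comparing a float score of exactly 0.6f with the double literal 0.6 would classify it as GOOD instead of FAIR. The static fromScore method is a hypothetical addition.

```java
// Hypothetical standalone classification matching the table above.
enum TicketHealthLevel {
    EXCELLENT, GOOD, FAIR, POOR, CRITICAL;

    static TicketHealthLevel fromScore(float score) {
        if (score >= 0.8f) {
            return EXCELLENT;
        } else if (score > 0.6f) {
            return GOOD;
        } else if (score > 0.4f) {
            return FAIR;
        } else if (score > 0.2f) {
            return POOR;
        }
        return CRITICAL;
    }
}
```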

Once the conversation health is classified, the front-end can visually represent the health status for each customer ticket. This allows support teams to quickly see which tickets need attention and focus on resolving critical issues first.

Key Takeaways & What’s Next

Evaluating conversation health is a complex challenge. In this article, we do not cover additional considerations such as:

- Recalculating conversation health when the ticket priority changes.

- Adjusting conversation health if the ticket reaches a workflow stage SLA.

- Automating responses or escalating ticket priority based on conversation context.

- Adjusting priority based on conversation health, for example escalating the issue and notifying the manager when the score falls too low, to ensure a swift resolution.

These aspects require deeper analysis and can be explored in future FlowInquiry revisions.

If you’re interested in the code implementation, you can check out how FlowInquiry handles conversation health in its open-source edition:

🔗 FlowInquiry Open Source Repository

In some cases, OpenAI may not be the best choice for high-volume helpdesk systems that manage many tickets and conversations. Frequent API calls can become costly, and not every AI task needs a model as capable as OpenAI’s. In addition, sending internal enterprise data to a public API may raise privacy concerns, and you have limited control over adapting the model to your specific application context.

To address these challenges, you can use other model providers supported by Spring AI, such as Ollama, which lets you run LLMs (Large Language Models) like Mistral, Llama 2, and Code Llama locally.

This eliminates the need to send data to external services, offering better cost efficiency, improved data security, and full control over AI processing while maintaining AI-powered capabilities.

In upcoming articles, we will explore how to integrate Ollama for conversation health calculation and discuss the potential for training custom models to better align with specific business needs.